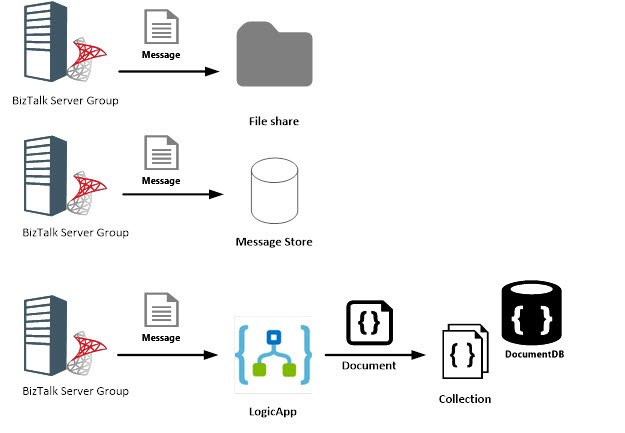

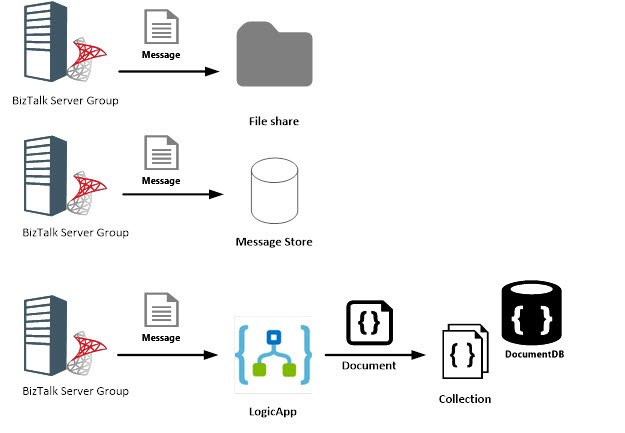

Archiving messages can be an interesting challenge with BizTalk. The product provides an out-of-the-box capability for archiving BizTalk messages that leverages the BizTalk run-time tracking engine and the BizTalkDTADb database for persistence. Depending on the load of the BizTalk environment, this database can grow quickly, and you then have to archive and purge it before it becomes a performance bottleneck. If the archive is important, the data is moved to another database. Alternatively, instead of using the BizTalk tracking capability, you can have a solution in place that archives the messages directly to another store on a different server. That store could be a database or a file share, depending on the requirements and the purpose of keeping the archived messages. A significant driver for archiving messages can be retention time, i.e. having access to archived messages for months or perhaps even years.

Introduction

Traditionally, archived messages were offloaded either through the BizTalk purge/archive job (DTA Purge and Archive), after which the backup of the tracking database was moved elsewhere, or through another mechanism that stored the archived messages on a file share or in a database. Today, with Azure, there are more options available, and archived messages could be offloaded to Azure services such as Storage (Table, Blob), SQL Azure or DocumentDB. A potential solution could be to send a copy of the message at the receive and send port to an Azure Service Bus queue, from which a WebJob or Logic App stores it in DocumentDB. Alternatively, the copy could be sent directly to a Logic App endpoint that handles the message, i.e. stores it in DocumentDB.

DocumentDB is a NoSQL database built for performance, i.e. it is fast. You can learn more about DocumentDB in Introduction to DocumentDB: A NoSQL JSON Database.

Any custom solution for archiving BizTalk messages (incoming and outgoing) will involve a custom pipeline that gets a copy of the message and handles it according to your requirements, for instance storing it to file, sending it to a Service Bus queue or posting it directly to a Logic App endpoint. Either way, you have to decide how to store the message and which metadata to store alongside the message context; otherwise you will not be able to find the message again.

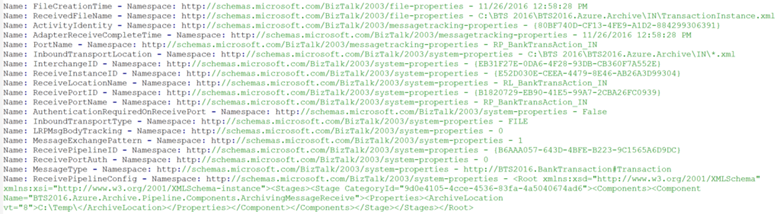

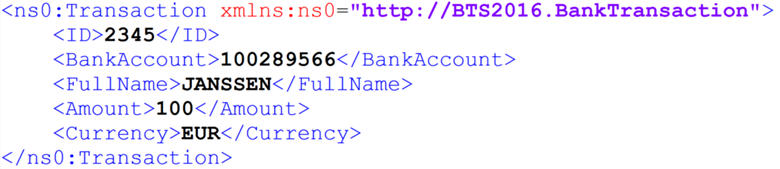

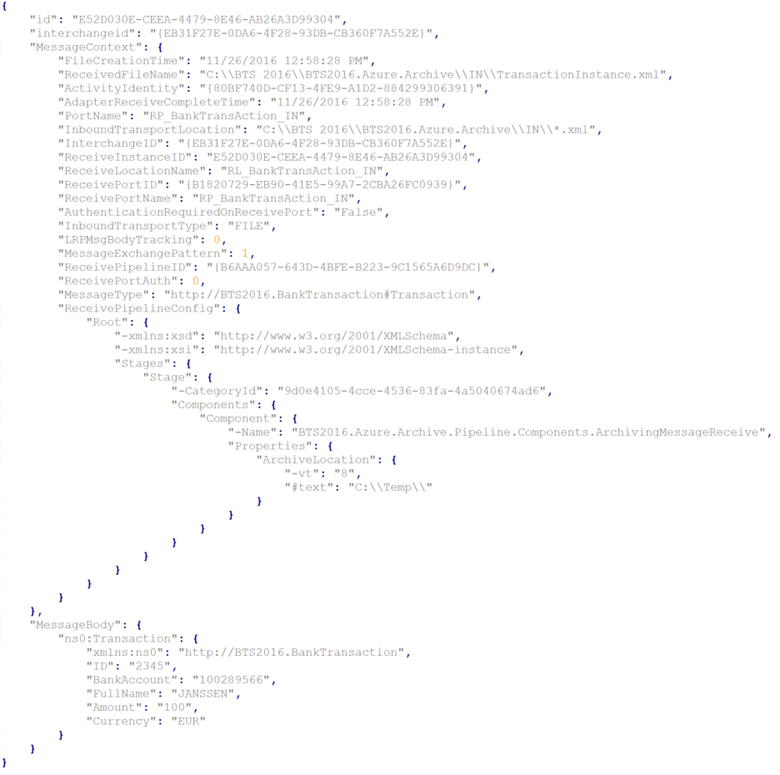

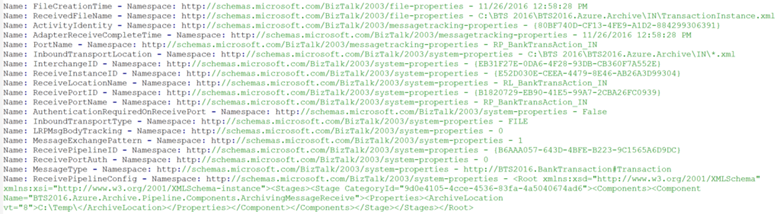

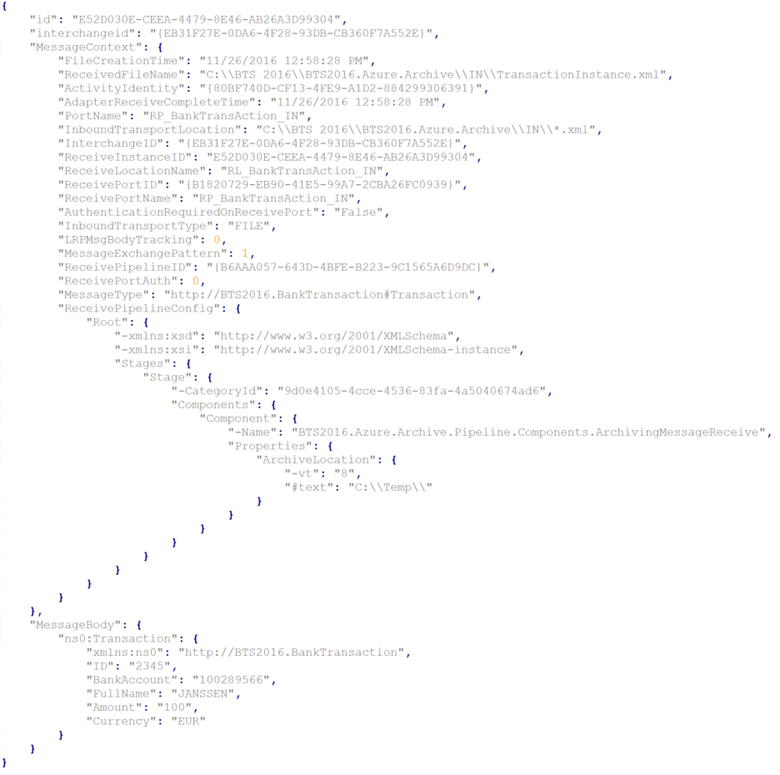

The output of a message received through the FILE adapter would look like below.

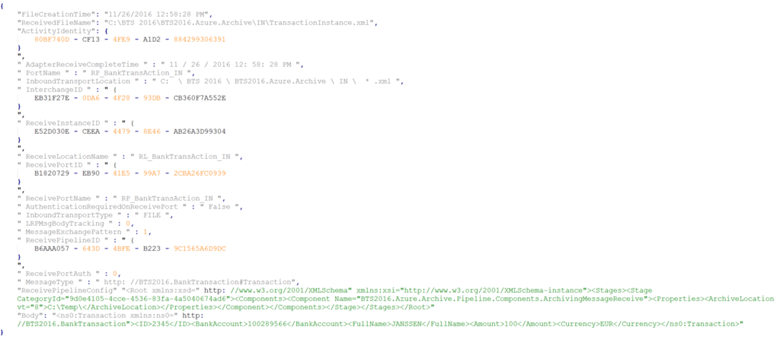

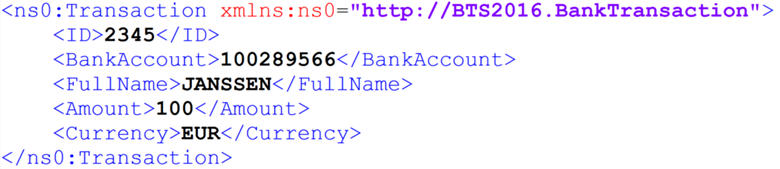

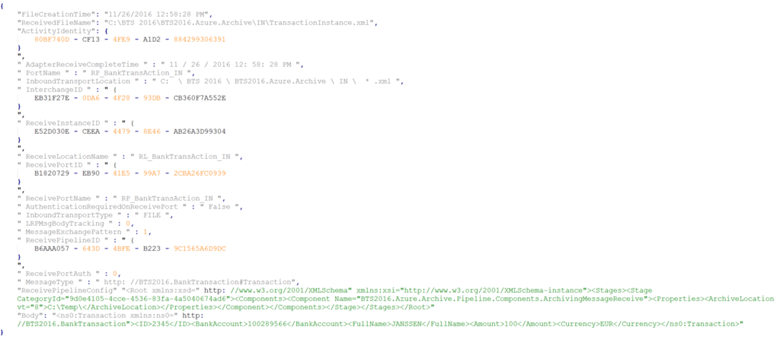

The names are all context properties of the message, and the XML is the payload, i.e. the body of the message. If you push the above as a JSON message to a Logic App endpoint, the JSON would look like below.

To insert messages into a DocumentDB collection, each document needs an “id” property. If it is missing, the DocumentDB connector in the Logic App fails with the error “One of the specified inputs is invalid”. The payload (POST) to the Logic App endpoint will be as follows:

To push the above to a Logic App with an HTTP endpoint, you need to define a schema, which can be done using http://jsonschema.net. You can learn about calling a Logic App directly in the Logic apps as callable endpoints document.
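Whatever the exact payload shape, the document needs an “id” next to the context properties and the body. A minimal sketch in Python could build such a document, assuming a hypothetical layout with a Context object for the context properties and a Body string for the XML payload (these field names are illustrative, not taken from the post):

```python
import json
import uuid
from typing import Dict, Optional


def build_archive_document(context: Dict[str, str], body_xml: str,
                           doc_id: Optional[str] = None) -> Dict[str, object]:
    """Wrap BizTalk context properties and the message body in a DocumentDB-ready document.

    The "Context" and "Body" field names are hypothetical; only "id" is required by DocumentDB.
    """
    if doc_id is None:
        # Without an "id" the DocumentDB connector fails with
        # "One of the specified inputs is invalid", so generate a GUID in braces.
        doc_id = "{" + str(uuid.uuid4()).upper() + "}"
    return {
        "id": doc_id,
        "Context": dict(context),  # shallow copy of the message context properties
        "Body": body_xml,          # XML payload kept as a string
    }


if __name__ == "__main__":
    # Hypothetical values for two context properties of a message received via FILE.
    doc = build_archive_document(
        {"InterchangeID": "{11111111-2222-3333-4444-555555555555}",
         "ReceivedFileName": "C:\\Archive\\In\\transaction.xml"},
        "<Transaction><Amount>100</Amount></Transaction>",
    )
    print(json.dumps(doc, indent=2))
```

Keeping the body as an XML string avoids converting the payload itself; only the envelope around it has to be JSON.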

The Logic App

The new kid on the block in Microsoft’s integration portfolio is Logic Apps. For those who do not know what a Logic App is: it is a hosted piece of integration logic in Microsoft Azure. More precisely, it is hosted in Azure in a similar way to a Web App, and the logic is built by creating a trigger followed by a series of actions, similar to a workflow. You can create and build Logic Apps entirely in a browser, which reduces development and lead time.

The first step in building a Logic App is provisioning it. In the new Azure Portal, click the + sign, navigate to Web + Mobile and then click Logic App. Alternatively, type Logic App in the search box of the Marketplace; the service is easy to find in the portal.

The next step is to specify the name of your Logic App, the subscription (in case you have multiple subscriptions), the resource group the Logic App should belong to and the location, i.e. which Azure data center. Then decide whether you want to pin the Logic App to the dashboard and click Create. Wait until the Logic App is provisioned before you proceed with building the flow (trigger and actions).

Once the Logic App is provisioned, you have set up a service, also known as iPaaS (integration Platform as a Service). The Logic App is fully managed by Microsoft Azure; the only thing you need to do is add the logic, i.e. specify the trigger and define the actions.

Building the Logic App definition

Once the Logic App is provisioned, you can click on it and open the Logic Apps designer, where you can add a trigger. You can select various triggers, such as HTTP, Recurrence or WebHook (see Workflow Actions and Triggers). In our scenario it is the HTTP trigger, which has to be provided with a schema of the payload it accepts. The schema can be generated using JsonSchema.net (http://jsonschema.net), a tool that automatically generates a JSON schema from JSON according to the IETF JSON Schema Internet Draft Version 4. The schema is generated in three formats: editable, code view and string. The generated schema can be pasted into the Request Body JSON Schema section of the HTTP trigger. Note that the URL is only generated after the Logic App has been saved for the first time.

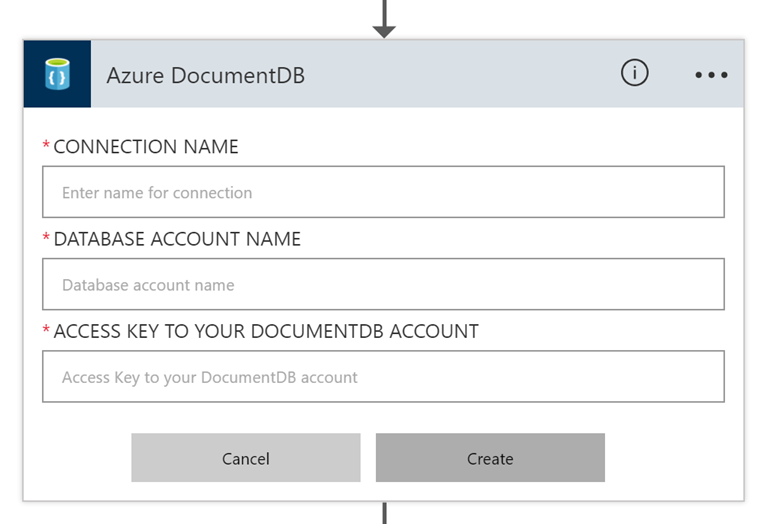

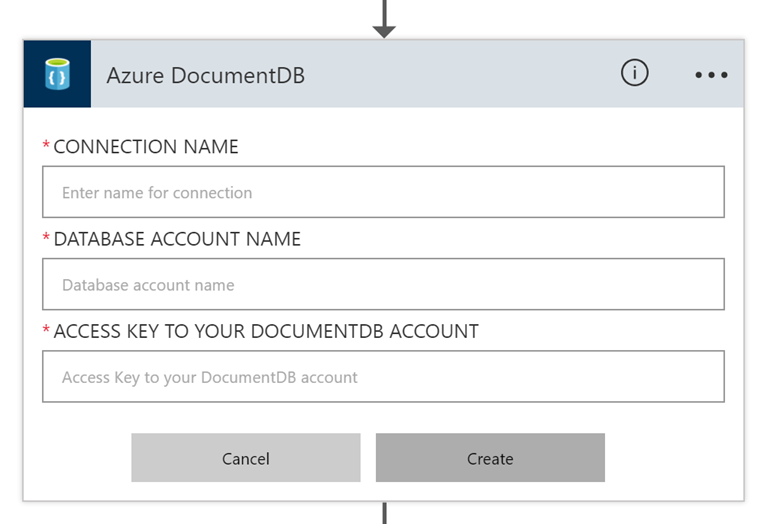
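JsonSchema.net does this for you, but the idea behind it fits in a few lines. The sketch below is a simplified stand-in (not the tool’s actual implementation) that infers a draft-04 schema with only type, properties, required and items from a sample payload; the sample field names Context and Body are hypothetical.

```python
import json
from typing import Any, Dict


def infer_schema(value: Any) -> Dict[str, Any]:
    """Infer a minimal draft-04 JSON Schema fragment from one sample value."""
    if isinstance(value, bool):  # must precede int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if value is None:
        return {"type": "null"}
    if isinstance(value, list):
        # Assumes homogeneous arrays: the first element stands in for all of them.
        return {"type": "array", "items": infer_schema(value[0]) if value else {}}
    if isinstance(value, dict):
        schema: Dict[str, Any] = {
            "type": "object",
            "properties": {k: infer_schema(v) for k, v in value.items()},
        }
        if value:  # draft-04 requires "required" to be non-empty when present
            schema["required"] = list(value.keys())
        return schema
    raise TypeError(f"unsupported JSON type: {type(value).__name__}")


def to_draft04(sample: Any) -> Dict[str, Any]:
    """Top-level schema with the draft-04 $schema keyword, ready to paste into the trigger."""
    schema = infer_schema(sample)
    schema["$schema"] = "http://json-schema.org/draft-04/schema#"
    return schema


if __name__ == "__main__":
    sample = {"id": "{E52D030E-CEEA-4479-8E46-AB26A3D99304}",
              "Context": {"InterchangeID": "{...}"},
              "Body": "<Transaction/>"}
    print(json.dumps(to_draft04(sample), indent=2))
```

Marking every sample property as required is a deliberate simplification; in practice you would relax required for context properties that are not present on every message.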

Next, you add an action; in our scenario this is a DocumentDB action, i.e. the DocumentDB connector, which is currently in preview. Once it is placed under the HTTP trigger, you have to specify the connectivity to the DocumentDB database.

You have to provide a meaningful name for the connection and specify the name of the database and the access key.

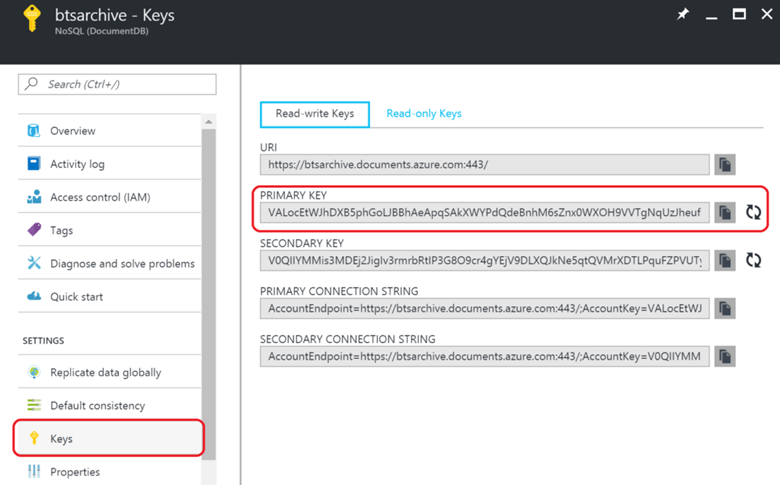

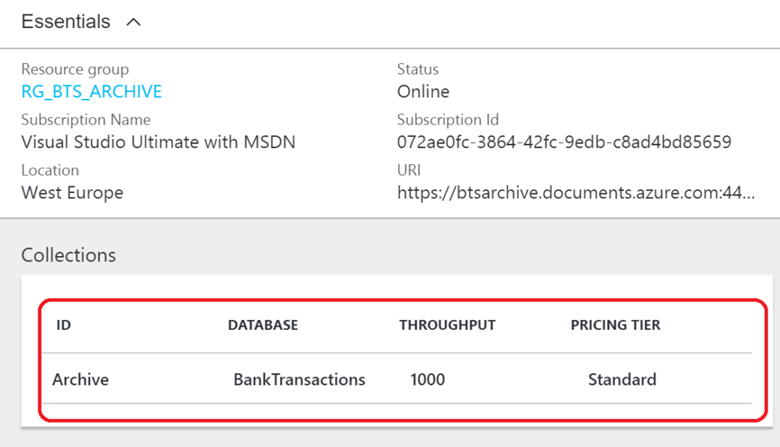

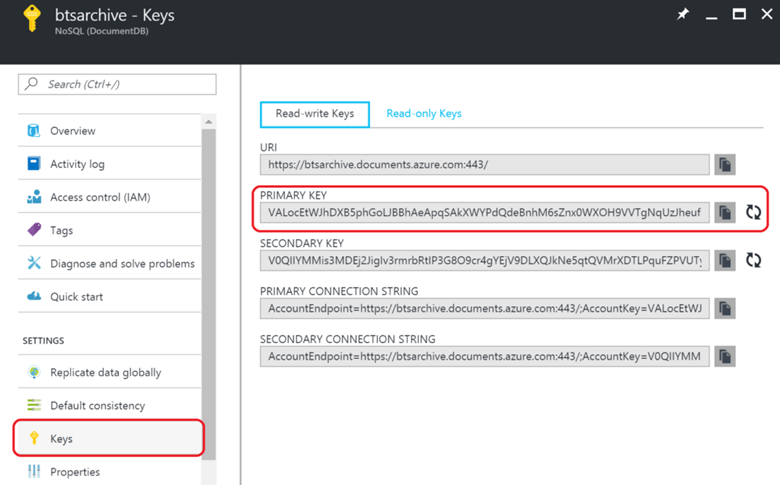

The access key can be found in the Keys section of the DocumentDB instance.

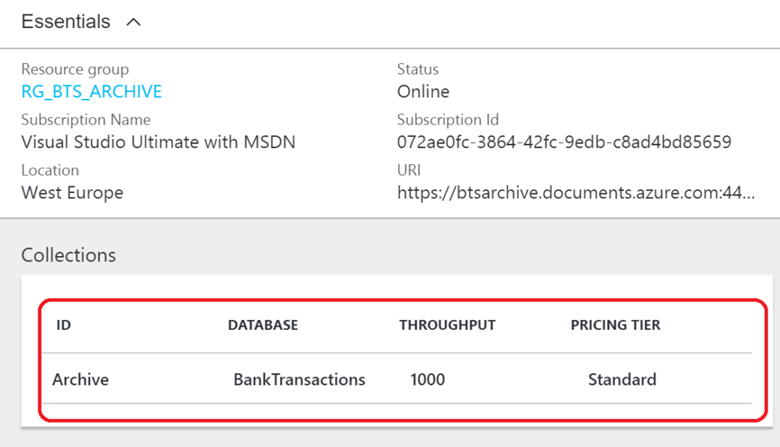

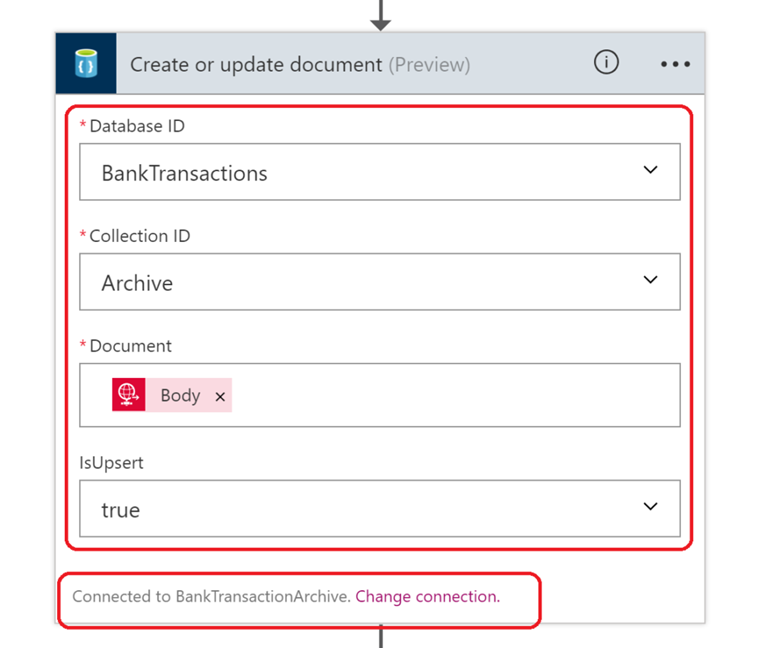

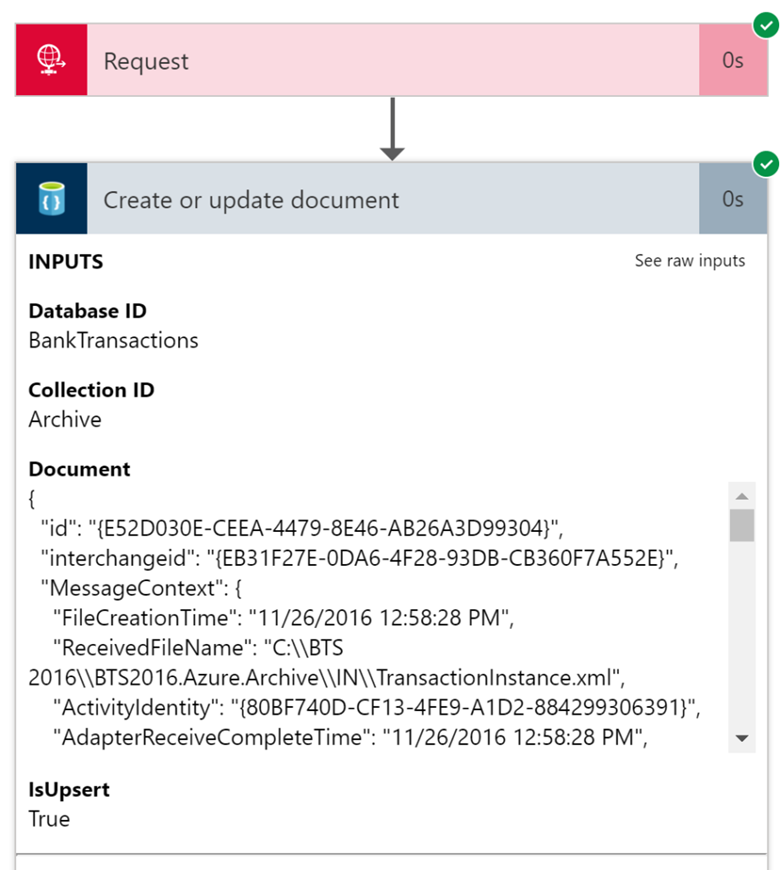

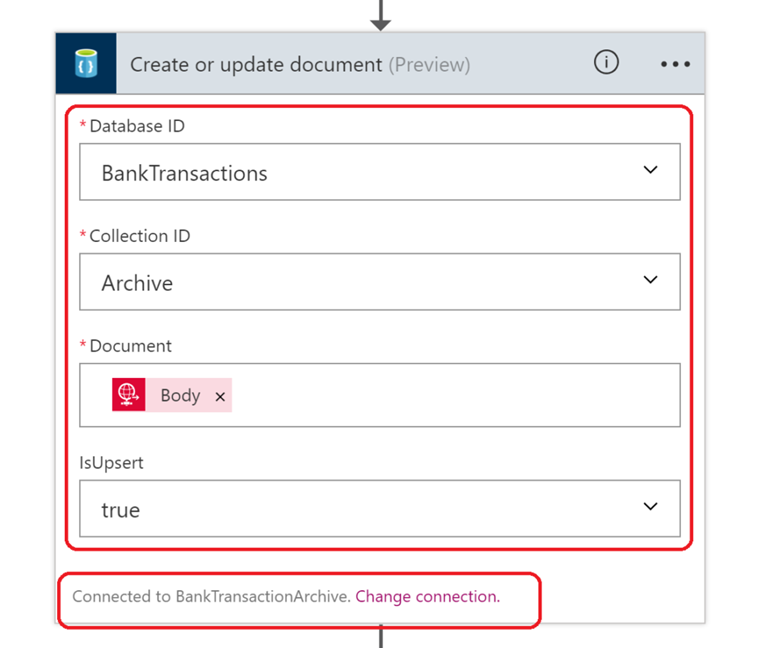

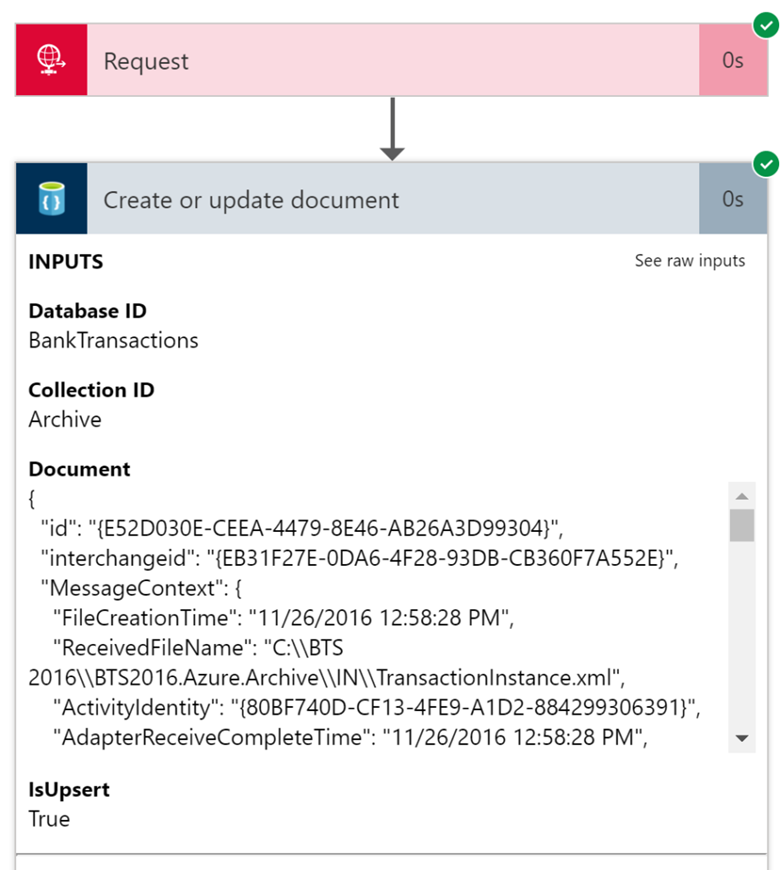

Once the connection is configured, you can work with the connector by specifying the Database ID, the Collection ID and the document you would like to add, create or update.

As you can see in the picture above, the connector uses the configured connection (BankTransactionArchive) and is tied to the Archive collection in the BankTransactions database. The document is the body of the in-flight message of the Logic App, i.e. the message received by the HTTP trigger.

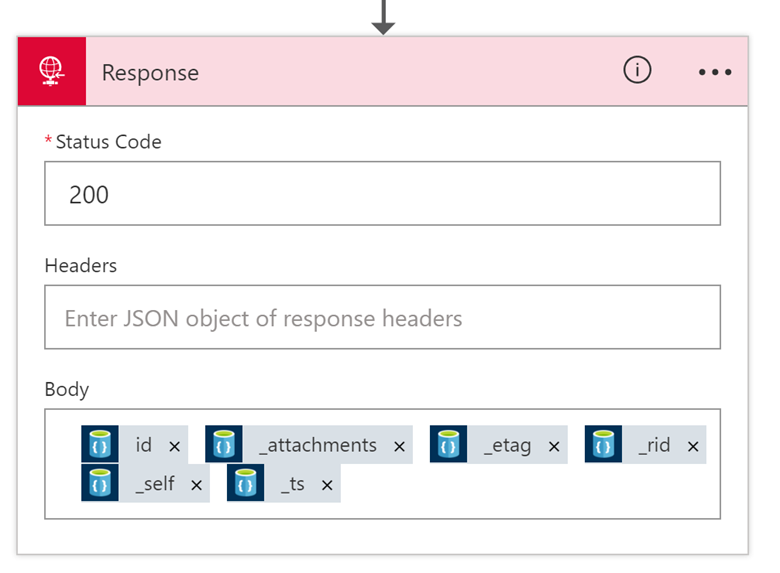

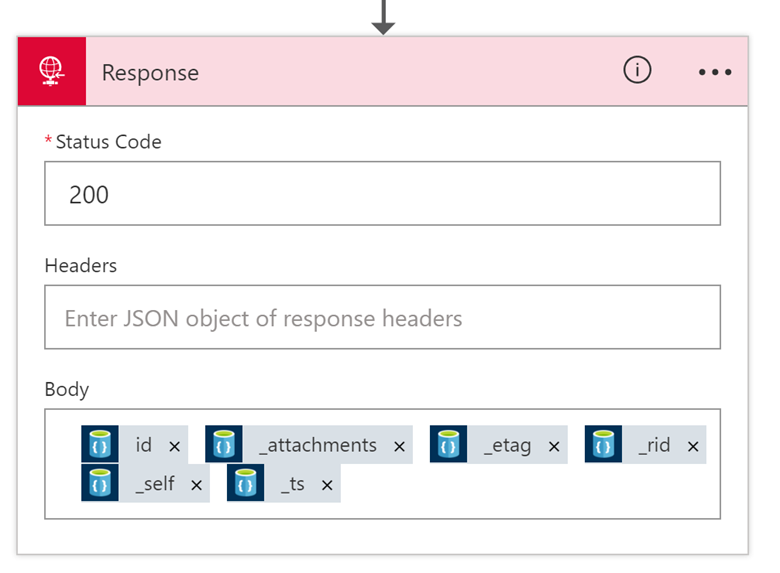

The final step is adding a Response action that returns the document ID (id) and the other metadata of the document, i.e. the system-generated properties (see DocumentDB hierarchical resource model and concepts). The status code is a mandatory field in this action. For the body, I have used the body of the response of the HTTP action.
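The system-generated properties returned by the Response action follow that hierarchical model; for example, the _self link of a document encodes the database, collection and document resource IDs. A small sketch that splits such a link into its parts, assuming the IDs themselves contain no “/” (true for the link returned in this post):

```python
from typing import Dict


def parse_self_link(self_link: str) -> Dict[str, str]:
    """Split a DocumentDB _self link such as dbs/<rid>/colls/<rid>/docs/<rid>/ into a dict.

    Segments alternate between a resource type (dbs, colls, docs, ...) and its resource ID.
    Assumes the IDs themselves contain no "/".
    """
    segments = [s for s in self_link.strip("/").split("/") if s]
    if len(segments) % 2 != 0:
        raise ValueError(f"malformed self link: {self_link!r}")
    return {segments[i]: segments[i + 1] for i in range(0, len(segments), 2)}


if __name__ == "__main__":
    link = "dbs/4i8eAA==/colls/4i8eAIRqBgA=/docs/4i8eAIRqBgAGAAAAAAAAAA==/"
    print(parse_self_link(link))
    # {'dbs': '4i8eAA==', 'colls': '4i8eAIRqBgA=', 'docs': '4i8eAIRqBgAGAAAAAAAAAA=='}
```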

After this last step, you can save the design (i.e. the Logic App definition); you can also save your flow after every step or two while you work. In the designer, you can also look at the code, i.e. the JSON. If necessary, you can edit the JSON manually in the so-called Code View, for example to add a retry policy to an HTTP action (see the HTTP Action documentation).

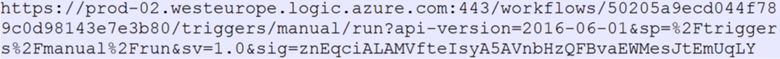

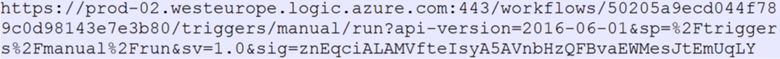

The logic (flow) in the Logic App is now defined and can be tested by posting a request (payload) to the generated endpoint. The endpoint contains a SAS signature (sig) in its query parameters, which is used for authentication (see also Logic apps as callable endpoints).

The sig value znEqciALAMVfteIsyA5AVnbHzQFBvaEWMesJtEmUqLY is validated before the Logic App fires. It is generated from a unique combination of a secret key per Logic App, the trigger name and the operation being performed.

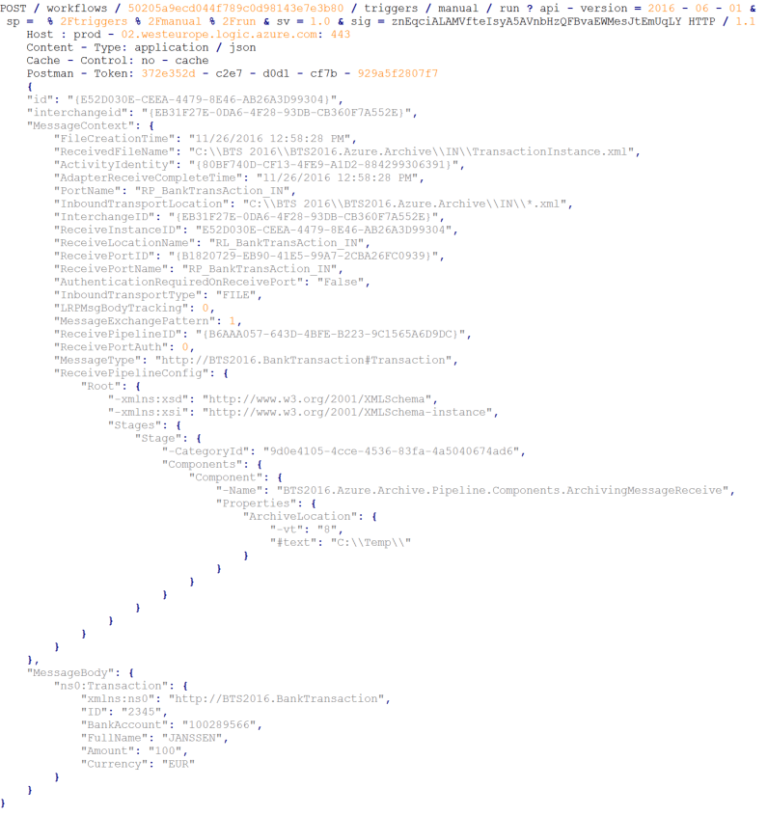
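To make the idea of such a signature concrete, here is an illustration only: a per-app secret key signs the trigger name and the operation with HMAC-SHA256, and the receiver recomputes the value and compares it in constant time. This is not Azure’s actual signing scheme; the message format trigger:operation and the example values manual and run are assumptions.

```python
import base64
import hashlib
import hmac


def illustrative_sig(secret_key: bytes, trigger_name: str, operation: str) -> str:
    """Illustrative only: HMAC-SHA256 of "trigger:operation" under a per-app secret key.

    This is NOT Azure's actual signing scheme; it shows how a secret, the trigger name and
    the operation can be combined into a value that cannot be forged without the secret.
    """
    message = f"{trigger_name}:{operation}".encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).digest()
    # URL-safe base64 without padding: 32 bytes -> 43 characters, safe in a query string.
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def is_valid_sig(secret_key: bytes, trigger_name: str, operation: str, sig: str) -> bool:
    """Recompute the expected value and compare in constant time before 'firing'."""
    expected = illustrative_sig(secret_key, trigger_name, operation)
    return hmac.compare_digest(expected.encode("ascii"), sig.encode("utf-8"))
```

Because the signature depends on the secret, anyone who has the full URL can still call the trigger, which is why the security consideration later in this post matters.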

Logic App in action

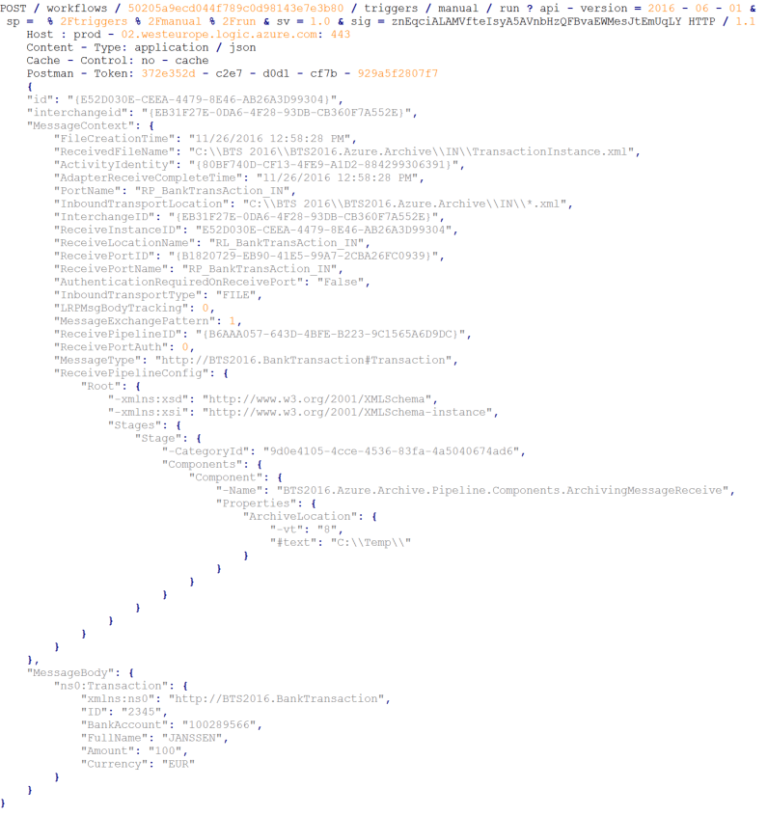

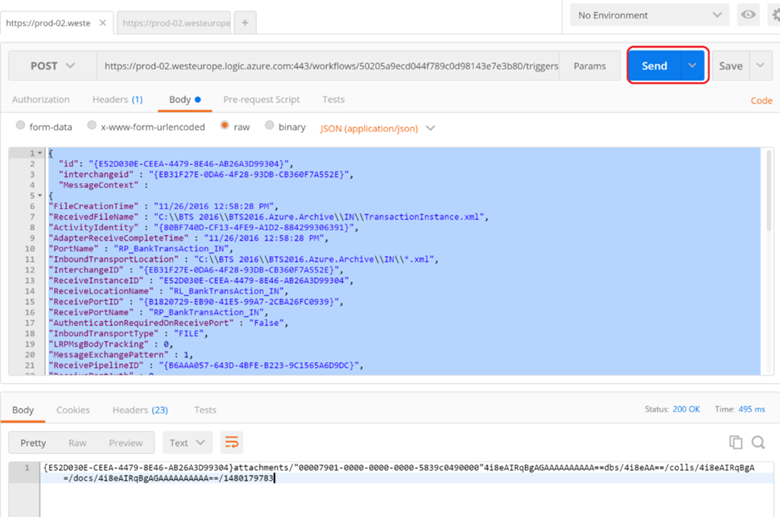

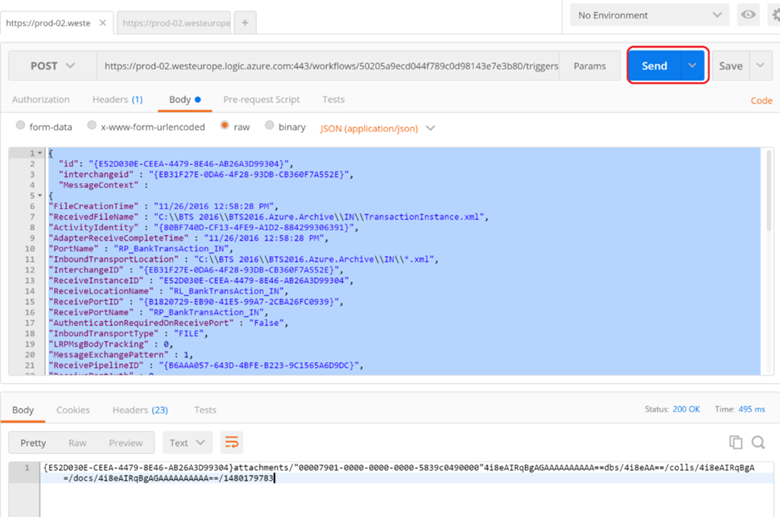

Our Logic App has been saved and is ready to run. Through Postman I am now able to post an archived message to the URL of the Logic App. The POST looks as follows.

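The result of the POST is the following response body, i.e. the document id and the system-generated properties:

- id: {E52D030E-CEEA-4479-8E46-AB26A3D99304}
- _attachments: attachments/
- _etag: "00007901-0000-0000-0000-5839c0490000"
- _rid: 4i8eAIRqBgAGAAAAAAAAAA==
- _self: dbs/4i8eAA==/colls/4i8eAIRqBgA=/docs/4i8eAIRqBgAGAAAAAAAAAA==/
- _ts: 1480179783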

In Postman this looks like the picture below.

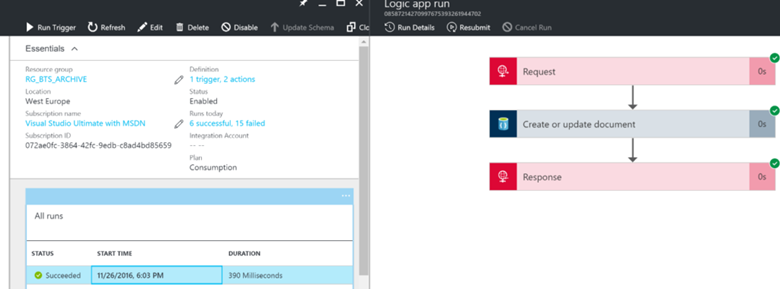

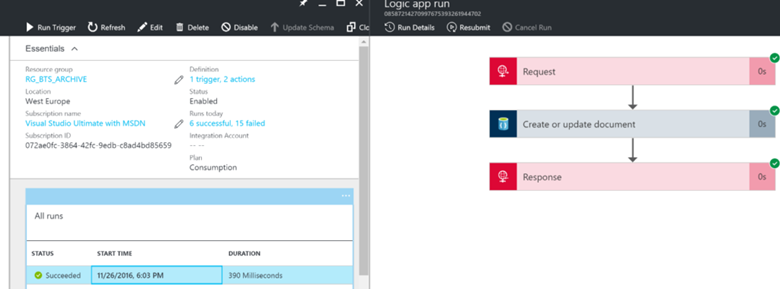
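Postman is convenient for a test, but a service that pushes archived messages would do the same POST in code. A minimal sketch using only the Python standard library, assuming the callback URL (including sig) is copied from the HTTP trigger and the Response action returns JSON:

```python
import json
import urllib.request
from typing import Any, Dict


def build_archive_request(endpoint_url: str, document: Dict[str, Any]) -> urllib.request.Request:
    """Prepare a JSON POST of one archive document to the Logic App HTTP trigger URL.

    endpoint_url is the callback URL copied from the trigger, including its sig query parameter.
    """
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(document).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_archive(endpoint_url: str, document: Dict[str, Any], timeout: float = 30.0) -> Dict[str, Any]:
    """Send the POST and return the JSON body produced by the Response action."""
    request = build_archive_request(endpoint_url, document)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

urlopen raises urllib.error.HTTPError for non-2xx status codes, which is the natural place to hook in retries or to fall back to a local spool.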

In the Logic App, I can verify the run.

Under All runs, you can select the run triggered by the POST request from Postman. A pane appears on the right with the Logic App definition, i.e. the trigger and the two actions. You can click on each step to inspect what occurred.

Being able to follow exactly what happens inside the definition is a very useful feature of Logic Apps.

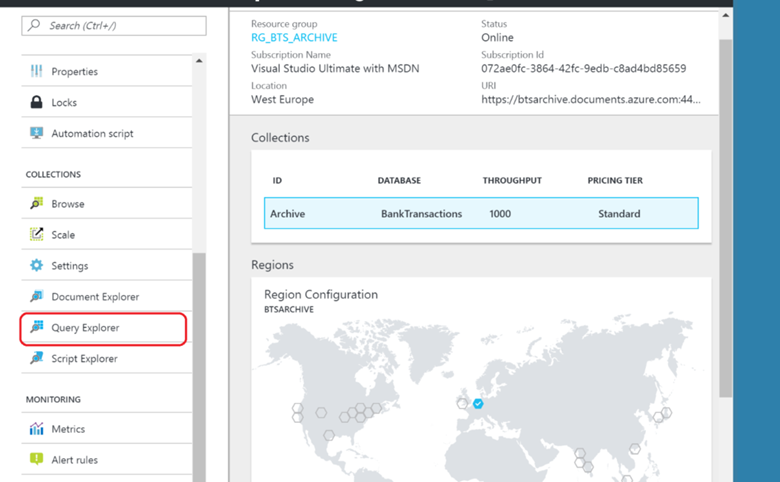

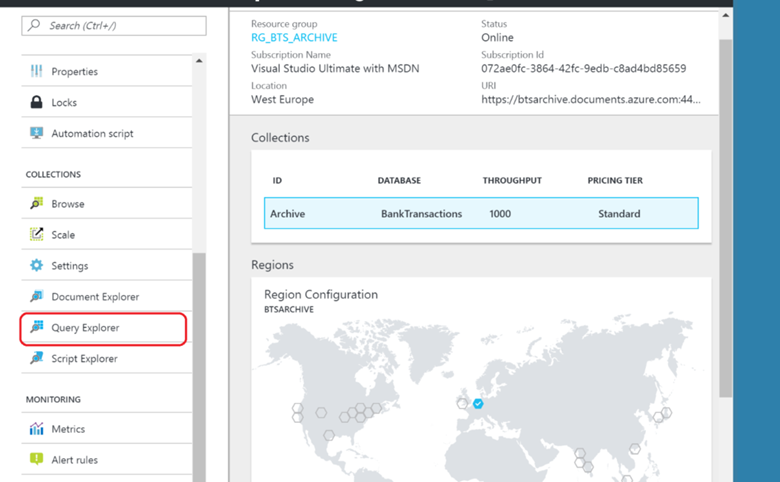

In DocumentDB, you can use the Query Explorer to query the collection for archived messages with the same InterchangeID.

The query includes a condition on the InterchangeID. The InterchangeID is a context property of the message; as an incoming message progresses through BizTalk, it is copied from one message to the next throughout the process(es). In our scenario, it is copied from the incoming message to the outgoing message that leaves through a Send Port to File. Technically, it is the MessageId of the received message that started the interchange. If you need a single ID to group “everything” together, this is it!
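In the Query Explorer you can type the query directly, for example SELECT * FROM c WHERE c.Context.InterchangeID = "{...}", where the property path c.Context.InterchangeID is hypothetical and depends on how you shaped the archived JSON. From code, the same query is better sent with a parameter, using the query/parameters body that DocumentDB accepts for SQL queries. The sketch below builds that body and, to show the grouping idea, groups already retrieved documents by InterchangeID:

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def interchange_query(interchange_id: str,
                      property_path: str = "c.Context.InterchangeID") -> Dict[str, Any]:
    """Build a parameterized DocumentDB SQL query for all archived messages of one interchange.

    property_path is hypothetical; it must match where InterchangeID lives in your documents.
    """
    return {
        "query": f"SELECT * FROM c WHERE {property_path} = @interchangeId",
        "parameters": [{"name": "@interchangeId", "value": interchange_id}],
    }


def group_by_interchange(documents: Iterable[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Group archived document ids by the InterchangeID stored in their (hypothetical) Context."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for doc in documents:
        key = doc.get("Context", {}).get("InterchangeID")
        if key is not None:  # skip documents archived without the property
            groups[key].append(doc["id"])
    return dict(groups)
```

The parameter keeps the InterchangeID value out of the query text, so GUIDs with braces or quotes cannot break the SQL.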

Considerations

Archiving messages as documents in DocumentDB might look like an easy option; however, there are a few things you need to consider:

- Scalability: What is the workload of your integration solution in BizTalk, or of the BizTalk environment itself? The number of messages can add up to a substantial load, even for DocumentDB. There is a cost factor too, as you might need to scale to a partitioned collection in DocumentDB and look at a different SKU and pricing. See the document Partitioning and scaling in Azure DocumentDB.

- Security: In this approach, we have not looked at securing the Logic App endpoints. By default, the endpoints are publicly reachable, so anyone who obtains the URL can call them, and you will need some type of security to prevent abuse of your archiving solution. In our scenario there is a signature in the Logic App URL; however, one could consider this “security through obscurity”, as the call to the Logic App could be intercepted, so it depends entirely on the security of the channel. An alternative would be to first push the message to be archived to a Service Bus queue and have the Logic App pick it up from there. The possible drawback of this approach is the maximum message size (256 KB for Standard, 1 MB for Premium).

- Availability: A direct post from a pipeline to a Logic App might not be desirable when the endpoint is unavailable or unable to process the number of messages. Instead, you could first persist the messages on disk, i.e. on a file share, and have a process (service) convert them to a suitable JSON format and push them to the Logic App endpoint.

- Search: Once the messages are stored in DocumentDB, you want to be able to find them quickly and easily. Indexing, or setting up some kind of easy identifier (not a GUID like the InterchangeID we used), would help. You will probably have to add a few extra metadata tags to each message you archive.

- Extensibility: To store a message in DocumentDB, it needs to be in JSON format (a document). Hence, the message needs to be converted to JSON as described in this article. Converting the message to the required format requires extra functionality (code), either in the pipeline or in a separate service that processes each message and pushes it to the Logic App endpoint.

- Message Size: Archiving messages in DocumentDB has a limitation on message size. When messages are large, in this context 500 KB, the amount of storage can easily run into gigabytes and partitioning of the collection would be required, which increases cost. See also the DocumentDB pricing calculator. Based on your scenario and requirements, you have to look at the message size, workload and persistence.

- Retention: How long do you want to keep the messages? With DocumentDB you can apply a retention policy (time to live, TTL), which is nice. However, the longer you keep the messages (documents) in your collection, the larger the collection grows (see Message Size).
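To get a feel for the numbers behind scalability, message size and retention, a back-of-the-envelope estimate helps. The sketch assumes two archived copies per message (receive port and send port) and ignores indexing overhead and the stored context properties; all input values are hypothetical.

```python
def estimate_storage_gb(messages_per_day: int, avg_message_kb: float, retention_days: int,
                        copies_per_message: int = 2) -> float:
    """Rough archive size in GB (binary units): daily volume x copies x average size x retention.

    copies_per_message=2 assumes one archived copy at the receive port and one at the send port.
    Index overhead and the stored context properties are ignored, so treat this as a lower bound.
    """
    total_kb = messages_per_day * copies_per_message * avg_message_kb * retention_days
    return total_kb / (1024 * 1024)  # KB -> GB


if __name__ == "__main__":
    # Hypothetical workload: 10,000 messages/day, 500 KB each, kept for 90 days.
    print(f"{estimate_storage_gb(10_000, 500, 90):.1f} GB")  # prints 858.3 GB
```

Even a moderate workload with 500 KB messages lands in the hundreds of gigabytes, which is where partitioned collections and retention settings start to drive the cost.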

Conclusion

In this blog post, we discussed an approach to archiving messages from a BizTalk environment or BizTalk application into DocumentDB using a Logic App. We did not discuss the logic to process, i.e. parse, an archived BizTalk message (message context and body) into JSON format and push it to the Logic App endpoint. There are several possible approaches for this; for instance, as mentioned in the post, you could write messages to a file share and subsequently parse them and push them to the Logic App. The Logic App is responsible for creating a document with the payload in a DocumentDB database. As explained in the post, there are several aspects to take into consideration with this approach. An archiving strategy for BizTalk is mandatory if the business requires traceability of messages and insight into the message flows of the processes supported by BizTalk. The approach explained in this post could be an option, or at least provide food for thought, when discussing that strategy.
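The file-share approach mentioned above could look roughly like this: archived messages are spooled as JSON files, and a separate process forwards them and only deletes a file once the push succeeded. A minimal sketch, assuming the pipeline already writes one JSON document per file and that send is a hypothetical callable (for example a wrapper around the HTTP POST to the Logic App) that raises on failure:

```python
import json
import time
from pathlib import Path
from typing import Any, Callable, Dict


def forward_spooled(spool_dir: Path, send: Callable[[Dict[str, Any]], None],
                    max_attempts: int = 3, base_delay: float = 1.0) -> int:
    """Push each *.json file in spool_dir via send(); delete a file only after a successful send.

    send is a hypothetical callable that raises an exception on failure.
    Returns the number of files forwarded.
    """
    forwarded = 0
    for path in sorted(spool_dir.glob("*.json")):
        document = json.loads(path.read_text(encoding="utf-8"))
        for attempt in range(1, max_attempts + 1):
            try:
                send(document)
            except Exception:  # broad on purpose: any failure means "try again later"
                if attempt == max_attempts:
                    break  # give up for now; the file stays in the spool for the next run
                time.sleep(base_delay * 2 ** (attempt - 1))  # delay doubles after each failure
            else:
                path.unlink()  # archived successfully, remove the spooled copy
                forwarded += 1
                break
    return forwarded
```

Because a file is removed only after a successful send, an unavailable endpoint delays archiving instead of losing messages; the trade-off is that a message can be sent twice if the process stops between the send and the delete.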
