Artificial Intelligence (AI), Machine Learning (ML), Big Data, and Blockchain are some of the buzzwords we have been hearing more and more in recent times.

As a technology enthusiast, I’m personally fascinated by the advances happening in these areas, and it’s mind-blowing to see the pace at which they are moving. At the same time, this technology has become almost a commodity: it is available for everyone to take advantage of at a fraction of the cost, thanks to public cloud providers like Microsoft, Amazon, Google, and IBM.

These days customers expect every software product to have some level of AI & ML. In this article, let’s take a closer look at whether AI & ML can be used for BizTalk Server Monitoring and what the practical challenges are.

About a year ago, we looked into what AI & ML could do for BizTalk Server Monitoring in BizTalk360. At that stage, I was not convinced that we could magically solve BizTalk Server Monitoring problems using machine learning alone. Here are some of the scenarios that struck me; the answers to these challenges are not straightforward.

I don’t want to sound too negative about using AI and ML for monitoring. There are certain use cases where AI and ML based solutions will be far superior to manual solutions (check out the last section of this article). However, I want to highlight some real-world challenges from my 15+ years of experience working with BizTalk Server.

Let’s first understand the basics. Machine learning is a class of algorithms that can learn from data and make predictions on it. Generally speaking, the more data you have, the better the outcome for machine learning techniques. Machine learning doesn’t require users to set explicit rules like “if this, then that.” It will make that determination on its own, based on the data and algorithms. There are three ingredients here: the data, the compute power, and the algorithms (models) that help you predict the outcome.

The quality of the data you have determines the quality of judgment in machine learning. So typically, you’ll start by collecting all the available metrics over a period of time to plot a predictive graph. From this you derive a baseline (an upper and lower bound for a given period) of acceptable values for each measurable metric.
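To make the baseline idea concrete, here is a minimal sketch in Python. It assumes a very simple model (mean plus or minus a few standard deviations) rather than whatever a real AI based product would use, and the function names and sample numbers are hypothetical.

```python
from statistics import mean, stdev


def build_baseline(history, k=3.0):
    """Return (lower, upper) bounds as mean +/- k standard deviations."""
    mu = mean(history)
    sigma = stdev(history)
    # A message count can never be negative, so clamp the lower bound at zero.
    return max(0.0, mu - k * sigma), mu + k * sigma


def is_violation(value, baseline):
    """True when the value falls outside the learned band."""
    lower, upper = baseline
    return value < lower or value > upper


# Hypothetical hourly message counts collected during a quiet period.
hourly_volume = [980, 1010, 1005, 995, 1020, 990, 1000, 1015]
baseline = build_baseline(hourly_volume)

normal_hour_flagged = is_violation(1008, baseline)  # inside the band
burst_hour_flagged = is_violation(4500, baseline)   # far above the band
```

With this sample data the band is only a few dozen messages wide, so an hour with 4,500 messages is flagged even if that burst is perfectly legitimate for the business.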

If there is any drastic change in the underlying conditions, then the whole algorithm needs to relearn.

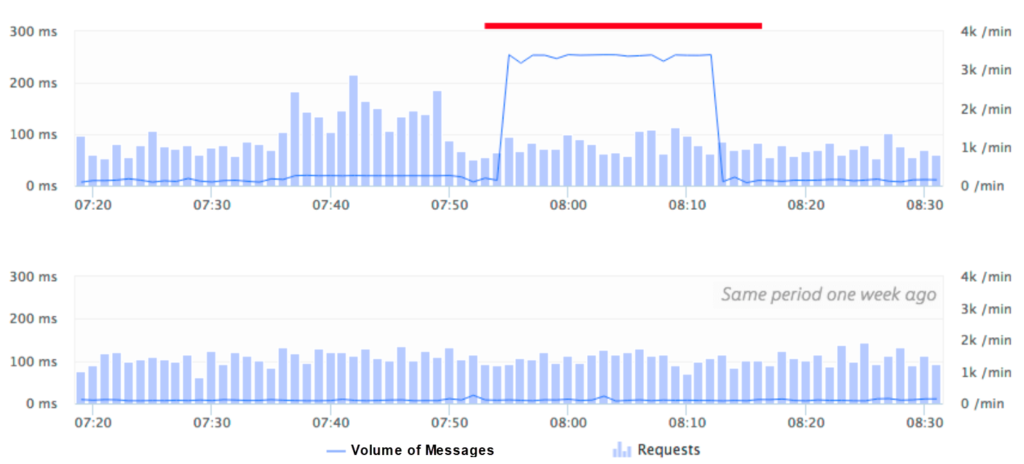

Let’s start with an example. In BizTalk (or the middleware world in general), the volume of messages passing through the system is crucial and one of the important things to monitor. We encounter the following scenario quite often. If you are using an AI based system, the period marked in red in the accompanying image will be flagged as a violation and you’ll get alerted, since it deviates significantly from the baseline.

The system relies entirely on the data it has collected over the past weeks or months, and blindly concludes that the volume of messages is deviating from the baseline volume.

In theory, this looks fantastic. However, in reality, this could be a big problem in a middleware solution. Situations like this, where the volume of messages is either higher or lower than normal, are common on a middleware platform, and we call this a “floodgate or drain scenario”.

We can probably keep training the system by telling it this is a known volume, but how often can you do that? You’ll get the false alert first, the admin then needs to teach the system that this is the expected volume, and the system understands it the next time. However, what happens if the floodgate occurs at a different time? Again, the system admin needs to correct it.

If your business frequently has unpredictable burst scenarios, the AI system will struggle to cope. It’s like a demo scenario where everything runs smoothly and the system performs fantastically, but the real world is far from the demo.

I mentioned in the beginning that “data is the king” in an AI based system. It’s like teaching a child: the child keeps learning every day based on what they see, what they hear, what they experience and so on. In a similar way, the AI system (if implemented well) will keep learning the new patterns that emerge in the system and tune itself. But there is a challenge: when the underlying platform changes dramatically, all the old learning might become completely invalid.

Let’s take an example! The production BizTalk environment was set up and tuned for six months or so, there were a lot of manual override corrections for the AI learning, and everything is working fine. Suddenly, there is a business requirement: a huge increase in the volume of transactions is expected, and the business wants to increase the hardware capacity. This could simply mean adding more servers to the BizTalk group (BizTalk or SQL Servers), increasing the processing capacity with higher-performing CPUs, adding more memory to the BizTalk or SQL servers, changing the SAN disks and so on.

Now all the learning and tuning you have done over the last 6 months is wasted, and the system has to be re-trained for another 6 months, with all the human-intensive manual tuning, to get back to the same level.

No one can simply judge what level of performance the new system configuration is going to provide; it can only be learned gradually.

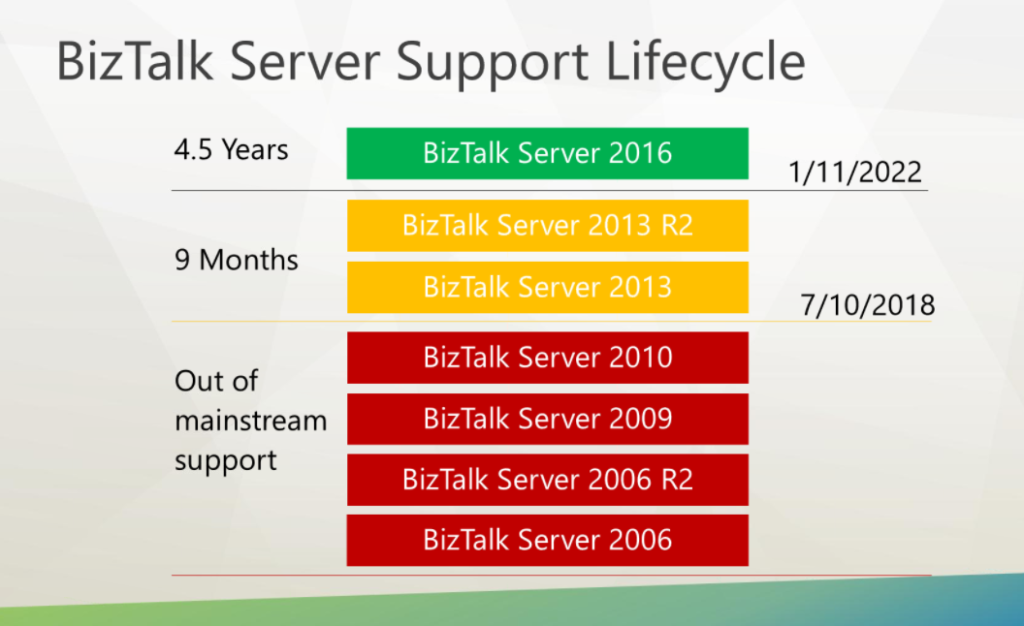

Another common and unavoidable scenario is the BizTalk Server upgrade, for example moving from BizTalk Server 2013 or 2013 R2 to BizTalk Server 2016. In fact, this is something that will be forced on you, since mainstream support for each version ends after 5 years (10 years with extended support).

BizTalk360 also supports the latest version, Microsoft BizTalk Server 2020.

Let’s say you are using BizTalk Server 2013 and depend on an AI powered monitoring solution. You have used the platform for 4 years and then decide to migrate to BizTalk Server 2016. In most cases, you will not just migrate your BizTalk Server; you’ll also migrate the OS and database, moving Windows Server from 2012 to 2016 and SQL Server from 2012 to 2016.

This will change the complete underlying platform and make all of your AI learning from the past 4+ years invalid, so you need to start from scratch. Can you afford it? By then, the team that put together and tuned the solution in the first place might have left the company.

Pretty much every business has a special seasonal period in the year where things go upside down. In retail, it’s Black Friday and the Christmas period; in financial services, it’s typically month-end or year-end closing dates. Most of these high-end businesses will have a system freeze or lock-down period where no changes are allowed.

When I worked for a financial services client, the whole of March was locked down ahead of 5th April, the tax year-end deadline in the UK. Some 50-60% of personal investments are made during this period, since everyone wants to take advantage of their personal tax allowances and customers typically leave it until the last minute.

How will an AI based monitoring system work under these circumstances? I can see only two choices. The first is to educate the system that this is a special period. For this, it must have seen the scenario before, i.e. over at least one or two yearly cycles. In the first year it would have raised false alarms all over the place, and someone would have tuned it by marking this as expected behavior. In the second year, it would validate the condition, and finally you might have a system that understands this unusual period and load, provided nothing else changed (like our hardware change) in the meantime.

The second option is simple: you turn off the monitoring for this period to avoid getting flooded with alarms.
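The second option can be pictured as a small rule that mutes alerts inside declared windows. This is only a sketch: the dates, names, and the idea of keeping the windows in a simple list are assumptions for illustration, not how any particular product implements it.

```python
from datetime import date

# Hypothetical lock-down windows declared up front by the BizTalk admin.
SPECIAL_PERIODS = [
    (date(2024, 3, 1), date(2024, 4, 5)),      # UK tax year-end rush
    (date(2024, 11, 29), date(2024, 12, 26)),  # Black Friday to Christmas
]


def in_special_period(day, periods=SPECIAL_PERIODS):
    """True when the day falls inside any declared window (inclusive)."""
    return any(start <= day <= end for start, end in periods)


def should_alert(day, value, upper_bound):
    """Alert on values above the bound, except during a declared window."""
    if in_special_period(day):
        return False  # monitoring is effectively switched off
    return value > upper_bound


march_alert = should_alert(date(2024, 3, 20), 3000, 1000)  # muted
june_alert = should_alert(date(2024, 6, 1), 3000, 1000)    # raised
```

The obvious cost is visible in the code: inside the window a genuine failure is muted along with the false alarms.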

A platform like a BizTalk environment is never a “set once and run it forever” platform. It will require regular manual interventions, either for performance tuning or for operational reasons. For example, for performance reasons you may be re-configuring the SQL Server database auto-growth parameter, the SAN configuration, or the purging and archiving settings for the tracking and BAM databases.

In a similar way, for operational reasons you may periodically turn certain things on and off, such as BizTalk Send Ports, Receive Locations, Orchestrations, and BizTalk Host instances. As a more concrete example, let’s say the FTP server of one of your external partners is down for maintenance. You will turn off the FTP/SFTP Send Port or Receive Location for that partner for a time, until the problem is resolved.

In situations like these, what will happen to the AI based monitoring system? As I mentioned before, “data is the king”: any change to the system will affect the AI’s interpretation of past data, and it will start working with the wrong thresholds. The AI system needs to forget the past learning and relearn the new configuration (over a few weeks or months), or someone needs to manually override it.

In a platform like BizTalk Server, monitoring just the high-level metrics like CPU%, memory, message volume, and event log error counts may not be sufficient. Let’s take an example scenario. You are integrating a bunch of external REST/HTTP or SOAP based web services with your BizTalk Orchestration. It’s important to keep an eye on the health of those external web endpoints: checking return status codes and response times, looking for a specific value in the response JSON/XML content, verifying multiple conditions in the response, waiting for x seconds/minutes before marking the system as down, and so on.
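The kind of explicit endpoint rule described above might look like the following sketch. The `Probe` record, the `"status": "UP"` field in the response body, and the threshold values are all hypothetical; the point is only that each condition is stated by a human rather than inferred from data.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    """One observation of an external endpoint (all fields hypothetical)."""
    timestamp: float    # seconds since monitoring started
    status_code: int    # HTTP status returned by the endpoint
    response_ms: float  # response time in milliseconds
    body: dict          # parsed JSON response


def probe_ok(p, max_ms=2000.0):
    """Healthy only if every explicit condition holds."""
    return (
        p.status_code == 200
        and p.response_ms <= max_ms
        and p.body.get("status") == "UP"
    )


def endpoint_down(probes, grace_seconds=120.0):
    """Down only if the endpoint has failed continuously for the grace period.

    Probes are assumed to be in chronological order.
    """
    failing_since = None
    for p in probes:
        if probe_ok(p):
            failing_since = None          # any healthy probe resets the clock
        elif failing_since is None:
            failing_since = p.timestamp   # start of the current failure streak
    if failing_since is None:
        return False
    return probes[-1].timestamp - failing_since >= grace_seconds


probes = [
    Probe(0, 200, 150, {"status": "UP"}),
    Probe(60, 500, 90, {}),                   # server error
    Probe(120, 200, 3500, {"status": "UP"}),  # too slow
    Probe(180, 200, 120, {"status": "DOWN"}), # bad content
]
sustained_outage = endpoint_down(probes)  # failing from t=60 to t=180
brief_blip = endpoint_down(probes[:2])    # a single failed probe so far
```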

Let’s take one more example. You may want to watch the growth of certain tables in SQL Server, for example “select count(*) from spool” in the BizTalk MessageBox database. This is very specific, and only a human BizTalk admin will know the importance of such a custom metric.
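As an illustration, a human-defined rule on that query could be as small as the sketch below. The warning and error thresholds are invented for the example and are not recommended values, and `run_scalar_query` is a hypothetical callable standing in for whatever database client you use to run the query against the MessageBox.

```python
SPOOL_COUNT_QUERY = "select count(*) from spool"


def classify_spool_size(row_count, warning_at=50_000, error_at=200_000):
    """Map a spool row count to a status using explicit, admin-chosen limits."""
    if row_count >= error_at:
        return "error"
    if row_count >= warning_at:
        return "warning"
    return "healthy"


def check_spool(run_scalar_query):
    """Run the count query through the supplied callable and classify it."""
    return classify_spool_size(run_scalar_query(SPOOL_COUNT_QUERY))


# Stand-in for a real database call, returning a made-up row count.
status = check_spool(lambda sql: 75_000)
```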

This level of fine control over specific systems and metrics will be challenging to achieve using an AI based monitoring system, since AI systems work on the volume of data and predictability. It might predict that the external system is down or responding slowly, but it cannot offer the granularity of control described here.

Now comes the million-dollar question: where is my data going to reside? As I mentioned repeatedly, “data is the king” for any AI based system to deliver on its promises. It needs to understand the various patterns that emerge from historical data in order to apply its algorithms. So where is the BizTalk Server metrics data going to reside: on-premise or in the cloud?

Even though it’s technically possible to collect and keep the data on-premise and run the AI system on-premise, most AI based systems are better suited to the cloud. This is mainly because of three factors: they require a huge data store, they require scalable compute power, and the algorithms require constant tweaking and tuning.

If the data is hosted on-premise, then maintaining such a system with constantly updated algorithms becomes even more challenging.

If the data is hosted in the cloud, it introduces regulatory challenges in industries like healthcare and financial services. Even though the AI based providers can claim that only metadata gets transmitted, how confident will the customers be?

It is surprising to see that a company like Microsoft, a market leader in machine learning and artificial intelligence, does not have any AI powered monitoring solution. None of its monitoring products, such as Azure OMS (Operations Management Suite), Azure Log Analytics, Azure Application Insights, or SCOM (System Center Operations Manager), claim they can automatically detect and fix problems using AI.

I highlighted Microsoft here; however, the same is true for other big players like Google, Amazon, and IBM (Watson). They have AI platforms, but none of them focus on AI powered monitoring solutions.

I’m sure it’s just a matter of time before these platforms mature and we see AI powered offerings (there are already a few bits and pieces), but it’s not going to be a 100% automated replacement anytime soon. You still need product and business knowledge to build a solid monitoring solution.

No, not at all. It’s important to embrace new technologies and move things forward. However, it’s also important to understand the limitations of such systems from a practical business point of view. Some scenarios are well suited to AI based predictive analysis and notification, such as identifying security vulnerabilities, detecting unusual threats like DoS attacks, face and voice recognition, and some elements of monitoring that are relatively static, like general message volume and response times.

Another area where I can see a real use case for an AI based solution is when it works on top of your business data, for example the volume of purchase orders you receive from various vendors over various periods. The AI system can learn about that volume and is not impacted by underlying platform changes, so it does not have to keep re-learning.

In the case of BizTalk Server Monitoring, I don’t see a clear answer to the challenges I highlighted above. If your platform is stable and nothing changes (no hardware changes, no tuning, no new deployments, no burst scenarios), then AI has a good chance of baselining your platform and then detecting and notifying you of variations from that baseline. But again, this is not practical for a live BizTalk Server environment.

As a market leader in the BizTalk Server Monitoring space for the past 7 years, we consistently improve the product and add value for our customers. We have invested heavily in areas that are practical and usable for enterprise BizTalk customers TODAY. We focused primarily on customer feedback (check out our BizTalk360 feedback portal), and most of our feature offerings were customer driven.

I’ve written an article explaining how BizTalk360 addresses the challenges highlighted in this article to provide a robust monitoring solution for your BizTalk Server environments. You can download it here.